SST, short for Statistical Software Tools, performs a large (and
ever-expanding) variety of statistical functions. These functions
include the entering, editing, transforming, and recoding of data that
every statistical package must have. Beyond data manipulation and
frequently used statistical procedures (such as regression analysis), SST
is geared toward the estimation of complicated statistical models. It is
SST's ability to handle difficult estimation problems -- and handle them
relatively quickly -- that distinguishes it from its competitors.

The purpose of this tutorial is to give the new user a short guided
tour through SST. We do not attempt to cover any advanced features
here, but after spending twenty or thirty minutes with the tutorial, you
should be able to run regressions using SST and understand the flavor
of the program.

A bit of background. SST was the idea of two econometricians at
the California Institute of Technology, Jeff Dubin and Doug Rivers.
The bulk of the programming was carried out by a group of present
and former Caltech students (Bob Lord, Richard Murray, Steve Beccue,
Dave Agabra, Carl Lydick, Dave Beccue, and others). SST, however, is
not supported by or in any way affiliated with the California Institute
of Technology. SST was designed with four primary considerations in
mind:

- It should be easy to use. The target audience, of course, was not
  a novice user, but the program should not be difficult to learn or,
  once learned, hard to use. We decided to make the program
  command-driven. Understanding a few simple commands is
  enough to get you started. Later, new commands can be learned to
  perform more difficult tasks.

- The program should be able to handle the sort of problems that
  frequently arise in our own research. Many microcomputer
  statistical packages, we found, could not handle the size of datasets
  that our work requires -- the size of datasets that many social scientists
  would expect a mainframe statistical package to handle without
  difficulty. Moreover, few mainframe packages were programmed
  for the estimation problems that we often encounter (multinomial
  logit, general maximum likelihood estimation, regression diagnostics,
  etc.).

- Microcomputer programs that could handle datasets as large as
  our work required often turned out to be unbearably slow.
  Whenever we added a procedure, we reworked the code many times
  (often rewriting large sections in assembly language) until we
  achieved reasonable speed. To us, reasonable means faster
  turnaround time than we would get time-sharing on a
  superminicomputer. To our programmers, the quest for speed must
  sometimes have seemed like the holy grail.

- We like to compute on different machines and under different
  operating systems. One of us is a UNIX fan and insisted on being
  able to run SST under UNIX. SST will run on any MS-DOS system
  and any UNIX system -- with no changes in syntax. If you want to
  run SST on some other computer, we can probably supply you with a
  version of SST that will work on that computer if we can find a
  satisfactory C compiler for it.

If SST meets these four goals (simple-to-learn and easy-to-use commands,
powerful estimation capabilities, speedy operation, and complete
portability and compatibility of versions between different operating
systems), then we think our effort will have been successful. This does
not mean that SST will satisfy everyone's statistical needs. We wrote
it to satisfy our own needs, not some nonexistent general user. But if
your needs are at all like our own, then we think you will be satisfied
with the result.

SST can be run in either interactive or batch mode. Interactive mode
seems easiest -- at first. When you make a mistake, SST informs you and
you can make corrections immediately. For complex tasks involving many
commands, batch files can be more efficient. Later we will show you some
of SST's unique features, which enable you to combine the best aspects of
each mode -- interaction with the program with less typing than a purely
interactive approach would require.

We will start with SST in interactive mode. To invoke SST
from MS-DOS, issue the command:

C>sst

SST loads quickly (under five seconds on an IBM PC/AT). You will be
greeted with the following response:

    SST - Statistical Software Tools - Version 1.0
    Copyright 1985,1986 by J.A. Dubin and R.D. Rivers
    SST1>

The string SST1> (not shown in the examples below) is the SST prompt.
Whenever it appears, SST is ready to receive your commands. SST doesn't
need to know the size of your datasets, but you can save the program some
work if you tell it the maximum number of observations (or cases) that you
expect to be working with. We will initially specify a limit of one
hundred observations:

range obs[1-100]

The RANGE statement doesn't commit us to 100 observations. We can
always issue a new RANGE statement increasing the number of
observations, but by limiting the sample this way we tell SST not to waste
its time (and ours) by worrying about data vectors longer than 100
observations. Failure to issue the RANGE statement will slow processing and
may lead to out-of-memory conditions.

The RANGE statement also illustrates the standard format of SST
commands. Each SST command has a name, such as `range', which should be
typed first. The command is modified by subops (in this case, the
OBS subop informs SST about which observations are active). Most
subops take arguments, enclosed in square brackets []. The OBS
subop, for example, takes a list of observations as its argument (in the
above example 1-100 or, equivalently if you really like to type,
1 2 3-8 9 10 11-99 100). There are also subops which don't take
arguments. One that works with nearly every command is the TIME
subop. Including the TIME subop in the RANGE command, as follows:

range obs[1-100] time

would cause SST to print the time elapsed between receiving the command
and finishing its execution. (If you want a thrill, try timing a
statistical procedure using your old statistical package and then try the
same thing with SST using the TIME subop.)
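To make the observation-list syntax concrete, here is a small Python sketch (not part of SST) that expands a list such as 1-100 or 1 2 3-8 9 10 11-99 100 into individual observation numbers and confirms that the two lists name the same observations. The function name parse_obs_list is hypothetical. The sketch assumes a list contains only single numbers and inclusive ranges written low-high, and treating commas as separators is also an assumption.

```python
def parse_obs_list(spec: str) -> list[int]:
    """Expand an SST-style observation list into sorted observation numbers.

    Hypothetical helper: handles single numbers ("7") and inclusive
    ranges ("3-8"); commas are treated like blanks (an assumption).
    """
    observations = set()
    for token in spec.replace(",", " ").split():
        if "-" in token:
            low, high = (int(part) for part in token.split("-"))
            observations.update(range(low, high + 1))  # ranges include both ends
        else:
            observations.add(int(token))
    return sorted(observations)


compact = parse_obs_list("1-100")
verbose = parse_obs_list("1 2 3-8 9 10 11-99 100")
print(compact == verbose, len(compact))  # True 100
```

Collecting the numbers in a set means overlapping pieces of a list (say 1-10 and 5) do not produce duplicate observations.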

For a listing of available SST commands, type:

help

and SST responds with a complete list of command names. If you don't
know or remember the syntax of an SST command, type `help' followed by
the command name. For example:

help range

produces a summary of the syntax of the range statement:

RANGE {OBS[observation list]} {IF[expression]} {TIME}

Keywords are printed in upper case. SST doesn't care whether you type
keywords in upper case or lower case, but you may not abbreviate or
misspell the keywords. Optional subops are enclosed in braces (`{' and
`}'). Don't type the braces (they have a special meaning in SST).
Arguments to subops are described in lower case letters. For example, the
syntax summary reminds you that the OBS subop takes a list of
observations as its argument. If you need more information about a
command beyond that provided by the HELP command, consult the SST User's
Guide or Reference Manual.

Congratulations. If you have followed up to this point, you have
successfully executed some SST commands. Of course, nothing useful has been
accomplished yet. Now, we're ready to get down to work.

The simplest way to enter data is to create a text file containing your
data using either a word processor (such as WordStar) or an editor (the
IBM PC comes equipped with a rudimentary editor called EDLIN). If you
create a data file this way, make sure that your word processor does not
include hidden control characters in the file. Numbers in the file should
be separated by blanks, commas, or newline characters (carriage returns).
If you are accustomed to working with large datasets on tape, you may feel
more comfortable with fixed formats, where each variable occupies a
particular column position. SST can read data in this form using a
Fortran-style format specification, but we will not discuss it here. See
the SST User's Guide for details.

There are two basic ways to organize data in a text file. If you have ten
observations on five variables, one way is to first list the values for
each of the five variables for the first observation, followed by the
values of the five variables for the second observation, and so forth. This
is called listing by observation, and is the default in SST.

The other way is to first list all ten observations for the first
variable, followed by all ten observations for the second variable, and so
forth. This way is called listing by variable or, naturally enough, BYVAR
in SST.

The last thing to do before giving SST your data file is to think up names
for your variables. SST names are limited to a maximum of eight characters
and can be composed of alphabetic characters, digits, and the underscore
character (`_'). For illustration, suppose the file data.raw
contains the following numbers:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15

(Not a very interesting dataset, but it will do for now.) Further, suppose
that these data represent five observations on each of three variables and
are organized by observation. We have chosen the rather uninspired names
var1, var2, and var3 for the variables. The following command would have
SST read the data:

read to[var1 var2 var3] file[data.raw]

The TO subop tells SST to create three variables with the assigned
names and then to read data on these variables from the file specified in
the FILE subop. Note that variable names in the TO subop were
separated by spaces. We could just as well have used commas to separate the
variable names. SST now has the following data in memory:

    obsno  var1  var2  var3
        1     1     2     3
        2     4     5     6
        3     7     8     9
        4    10    11    12
        5    13    14    15

You did not create the variable obsno (SST did this automatically), but
you can refer to it just like any other variable.

If we had told SST instead that the data were organized by variable:

read to[var1 var2 var3] file[data.raw] byvar

then SST would have the following data in memory:

    obsno  var1  var2  var3
        1     1     6    11
        2     2     7    12
        3     3     8    13
        4     4     9    14
        5     5    10    15

The BYVAR subop does not take any arguments.
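The difference between the two layouts is just a matter of which positions in the file go to which variable. The Python sketch below (not SST code; the function name arrange is hypothetical) reproduces both tables above from the fifteen numbers in data.raw.

```python
def arrange(values, names, byvar=False):
    """Split a flat sequence of numbers into named variables.

    byvar=False mimics listing by observation (SST's default);
    byvar=True mimics the BYVAR subop (listing by variable).
    """
    k = len(names)
    if len(values) % k != 0:
        raise ValueError("number of values is not a multiple of the number of variables")
    n = len(values) // k  # number of observations
    if byvar:
        # the first n values go to the first variable, the next n to the second, ...
        return {name: values[j * n:(j + 1) * n] for j, name in enumerate(names)}
    # every run of k consecutive values is one observation
    return {name: values[j::k] for j, name in enumerate(names)}


data = list(range(1, 16))  # the numbers 1..15 in data.raw
names = ["var1", "var2", "var3"]
print(arrange(data, names))
# {'var1': [1, 4, 7, 10, 13], 'var2': [2, 5, 8, 11, 14], 'var3': [3, 6, 9, 12, 15]}
print(arrange(data, names, byvar=True))
# {'var1': [1, 2, 3, 4, 5], 'var2': [6, 7, 8, 9, 10], 'var3': [11, 12, 13, 14, 15]}
```

When the data are listed by observation, the slice values[j::k] takes every k-th number starting at position j. When they are listed by variable, each variable occupies one contiguous block of n numbers.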

Now that your data is in SST, you may want to save it in a form that will
allow you to skip the READ command when you use the data again. SST has
its own compact format for saving data files. These files have the
advantage that they can be read very quickly and that all information about
a variable (labels, missing values, date last modified) is stored with the
data values in an SST system file. If you give the command:

save file[olddata]

SST will save var1, var2, and var3 in the file olddata.sav. Note that SST
automatically adds the extension `.sav' to system files, unless you
specify some other extension in the FILE subop. If, for some
reason, you only want to save variables selectively, add the VAR
subop specifying which variables are to be saved:

save file[newdata] var[var1 var2]

The file newdata.sav would differ from olddata.sav only in that
var3 was excluded from the former and included in the latter.

Saving data does not remove that data from memory. It is still available
for use during the current session. It is also a simple matter to reenter
saved data into SST. Just issue the LOAD command:

load file[newdata]

As with the SAVE command, the FILE subop of the LOAD
command will assume the file extension `.sav' unless some other extension is
specified. The SST system file newdata.sav contains five observations on
each of the variables var1 and var2, which would again be available for
data analysis.

There are other ways to enter data into SST. The ENTER command
(described in the User's Guide) is convenient for entering small amounts of
data from the keyboard. SST can also read data produced by other programs,
such as dBASE II and VisiCalc, by simply specifying the format of the file
to be read (DB2 or DIF). This feature is covered in more detail in the
User's Guide.

Once your data has been read, you will probably want to make some
adjustments to it. For example, one variable (faminc) might be the
income of families in a sample of households, but the relevant variable
might be the logarithm of family income. We can use the SET statement to
create a new variable loginc:

set loginc = log(faminc)

SST has a wide variety of functions including, as we will explain below,
ones you define yourself. A few of the most commonly used functions are:

    log()       natural logarithm
    exp()       exponential function
    sqrt()      square root
    abs()       absolute value
    cumnorm()   cumulative normal probabilities
    phi()       normal probability density function
    bvnorm()    bivariate normal probabilities

SST also can perform all the standard arithmetic operations. For example:

set z = (x-y)^3 / sqrt(x*y)

is equivalent to the formula:

$$ z = \frac{(x - y)^3}{\sqrt{xy}} $$

The rules of precedence for evaluating complex expressions are
standard, but if you have any doubts about what SST will do, add
extra parentheses to be on the safe side.
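As a quick numerical check of the z formula, the following Python lines evaluate the same expression. Python writes exponentiation as ** rather than ^. The input values x = 4 and y = 1 are made up for illustration.

```python
import math


def z_value(x: float, y: float) -> float:
    """Evaluate (x - y)^3 / sqrt(x*y), the expression in the SET example."""
    return (x - y) ** 3 / math.sqrt(x * y)


print(z_value(4.0, 1.0))  # (4 - 1)^3 / sqrt(4) = 27 / 2 = 13.5
```

The parentheses around x - y matter. Without them, the standard precedence rules would cube only y.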

It is also possible to perform conditional transformations. For
example, suppose our dataset has three variables: hinc (husband's
income), winc (wife's income), and head (a dummy variable which
takes the value 1 if the wife is classified as head of household and the
value 0 if the husband is classified as head of household). To
form a new variable which is the income of the head of household, we
could issue two SET statements:

set headinc = winc; if[head==1]

set headinc = hinc; if[head==0]

We have introduced a new subop, IF, which controls which
observations the command will be applied to. The argument to the IF
subop is a logical expression, which can be either true or false. If the
expression in the IF subop is true for a particular observation in
the active sample range, then the SET statement will be performed for that
observation; otherwise that observation will be skipped.

Note in the above SET statements that the transformation was set off
from the IF subop with a semicolon (`;'). The semicolon is required
if some additional subops appear in the command, but otherwise may be
omitted.

Logical expressions in the IF subop can use any of the following
relational operators:

    ==   equal to
    >    greater than
    >=   greater than or equal to
    <    less than
    <=   less than or equal to
    !=   not equal to

Logical expressions can also be made fairly complex by using some
additional operators. Any of the standard arithmetic operators can be
used in logical expressions, as well as the following logical operators:

    &    and
    |    or (nonexclusive)
    !    negation

For example, to set faminc equal to the combined income of the
husband and wife only for those families with a male head and
combined income of less than $25,000:

set faminc = hinc+winc; if[(head==0) & (hinc+winc<25000)]

Also, if the IF subop does not contain a relational operator, the
logical expression is evaluated numerically and values of one are
interpreted as being true. Thus, since head is a dummy variable
(taking values of one and zero), the following SET statement will
apply only to observations where head is one (female head):

set faminc = winc; if[head]

while the following SET statement will apply only to observations where
head is zero (male head):

set faminc = hinc; if[!head]

This feature means that the IF subop can be used like the Boolean
subop found in some other statistical packages.

It is also possible to modify the operation of the SET statement
with the OBS subop, which restricts the range of observations on
which the operation will be performed. For example, to transform only the
first ten observations:

set loginc = log(faminc); obs[1-10]

The OBS subop can be combined with the IF subop if further
control over the range of the transformation is desired. The OBS and
IF subops only modify the active sample range for the particular command
they are issued with. Afterwards, the sample range returns to whatever it
was previously (as determined by the RANGE statement, if issued).
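The per-observation logic of SET with the IF and OBS subops can be sketched in Python. The sketch reruns the headinc example and then combines OBS with IF. The function set_where and the sample incomes are hypothetical. The sketch assumes that observations outside the active sample keep their previous values, with None standing in for a value that has not yet been set. That matches the description of skipped observations, but it is not a statement about how SST stores missing values.

```python
def set_where(target, new_values, cond=None, obs=None):
    """Return a copy of target, overwritten only on the active sample.

    An observation (numbered from 1) is active if it is listed in obs
    (all observations when obs is None) and cond is true for it (all
    observations when cond is None). Other observations are left as they were.
    """
    result = list(target)
    for i in range(len(result)):
        in_obs = obs is None or (i + 1) in obs
        in_if = cond is None or bool(cond[i])
        if in_obs and in_if:
            result[i] = new_values[i]
    return result


# Hypothetical data: head == 1 means the wife is head of household.
hinc = [30000, 20000, 45000]
winc = [25000, 35000, 10000]
head = [0, 1, 0]

headinc = [None] * len(head)  # not yet set
headinc = set_where(headinc, winc, cond=[h == 1 for h in head])
headinc = set_where(headinc, hinc, cond=[h == 0 for h in head])
print(headinc)  # [30000, 35000, 45000]

# OBS and IF together: only observations 1-2 that also satisfy head == 0.
print(set_where([0, 0, 0], [1, 1, 1], cond=[h == 0 for h in head], obs={1, 2}))
# [1, 0, 0]
```

The active sample is recomputed for each call, which mirrors the point that OBS and IF only affect the command they are issued with.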

If a particular transformation is going to be used over and over again, it
is simpler to define a function which will perform this transformation
using the DEFINE statement. Sociologists frequently "standardize"
their data to have mean zero and variance one, and the resulting variable
is sometimes called a z-score. If you do this often, it is probably
worthwhile to define a z-score function:

define zscore(x,meanx,varx) = (x - meanx)/sqrt(varx)

In the above expression, x, meanx, and varx are
parameters that the user can supply when needed, rather than existing
variables in memory. Later, to standardize a variable y which has
mean -5.33 and variance 8.85, we could issue the following SET statement:

set zy = zscore(y,-5.33,8.85)

Then zy would be the standardized version of y.
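For comparison, here is the same z-score function written in Python. The second part computes the mean and variance from the data instead of typing them in. It uses the sample variance with an n - 1 divisor. That choice is an assumption, because the tutorial does not say which divisor produced the variance 8.85. The data values are hypothetical.

```python
import math
from statistics import fmean, variance


def zscore(x: float, meanx: float, varx: float) -> float:
    """Same formula as the DEFINE statement: (x - meanx)/sqrt(varx)."""
    return (x - meanx) / math.sqrt(varx)


# Using the mean and variance quoted in the text:
print(zscore(-5.33, -5.33, 8.85))  # 0.0, an observation at the mean

# Computing the mean and (sample) variance from hypothetical data instead:
y = [2.0, 4.0, 6.0]
zy = [zscore(v, fmean(y), variance(y)) for v in y]
print(zy)  # [-1.0, 0.0, 1.0]
```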

SST also has the ability to recode data. The RECODE command uses the
MAP subop to specify how values of the old variable are to be recoded
into a new variable. Sometimes, for example, we might want to
have a dummy variable coded +1 and -1 instead of 1 and 0. We could recode
the variable head by issuing two SET statements:

set newhead = head
set newhead = -1; if[head==0]

The variable newhead equals 1 if the wife is head of household and
-1 if the husband is head of household. The same task could be
accomplished using the RECODE command:

recode var[head] map[1=1 0=-1] to[newhead]

The RECODE command uses three subops. The
VAR subop takes a list of one or more variables which will be
operated upon. The TO subop takes a list of one or more
variables which will be created by the procedure. In the above example,
both the VAR and TO subops were given only one variable, but
it would be possible to recode several variables simultaneously. The number
of variables specified in the VAR subop must always equal the number of
variables specified in the TO subop. The MAP subop in the example
tells SST how to recode the variable head into the new variable
newhead. When head equals 1, newhead will equal 1,
and when head equals 0, newhead will equal -1. Actually, the
assignment of the value 1 to newhead when head equals 1 is
redundant, since values of head which are not specified in the MAP
subop are automatically written to the new variable. Thus the last
RECODE statement is equivalent to:

recode var[head] map[0 = -1] to[newhead]

The TO subop is optional in the RECODE statement. If
the TO subop is omitted, the new variable is written over the old
variable. When this is done, the old data is lost.
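The MAP rule can be sketched in Python with a dictionary lookup: values listed in the map are replaced, and values not listed are copied through unchanged. The function recode is hypothetical and handles a single variable. The data are made up.

```python
def recode(values, mapping):
    """Recode values using mapping; values not in mapping pass through unchanged."""
    return [mapping.get(v, v) for v in values]


head = [1, 0, 0, 1]
full = recode(head, {1: 1, 0: -1})  # like map[1=1 0=-1]
short = recode(head, {0: -1})       # like map[0 = -1]
print(full)           # [1, -1, -1, 1]
print(full == short)  # True: the 1=1 entry is redundant
```

Assigning the result back to the same name (head = recode(head, {0: -1})) corresponds to omitting the TO subop. The original values are then gone.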

We now assume that data has been read into memory and whatever
transformations you desired have been performed. To check which variables
are stored in memory, try the LIST command:

list

LIST can be used with some subops to obtain different information,
but we will not discuss them here. We will assume that variables x,
y, and z are now stored in memory. It is always good
practice to examine the data carefully to see that the data are what you
think they should be. Descriptive statistics can be calculated using the
COVA command:

cova var[x y z]

COVA prints the means, standard deviations, and minimum and maximum
values of each variable in the VAR subop. It is possible to
restrict the amount of output that COVA prints by specifying one or
more of the following subops:

MEAN STD MIN MAX COV

If any of these subops is included with the COVA statement, only the
requested information will be printed. To get only the means and standard
deviations of the variables x and y, give the command:

cova var[x y] mean std

Using the COV subop will display the correlation/covariance matrix.
The correlation/covariance matrix contains variances of the variables on
the diagonal, correlations above the diagonal, and covariances below the
diagonal.
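The layout of this combined matrix is easy to misread, so here is a small pure-Python sketch that builds one. Variances go on the diagonal, correlations above it, and covariances below it. The function name and data are hypothetical, and the n - 1 divisor is an assumption about SST (the correlations do not depend on it).

```python
import math


def cov_corr_matrix(columns):
    """Variances on the diagonal, correlations above it, covariances below it.

    columns is a list of equal-length lists, one per variable. The n - 1
    divisor is an assumption; the correlations do not depend on it.
    """
    k, n = len(columns), len(columns[0])
    means = [sum(col) / n for col in columns]

    def cov(i, j):
        return sum((a - means[i]) * (b - means[j])
                   for a, b in zip(columns[i], columns[j])) / (n - 1)

    matrix = [[0.0] * k for _ in range(k)]
    for i in range(k):
        for j in range(k):
            if i == j:
                matrix[i][j] = cov(i, i)  # variance
            elif i < j:
                matrix[i][j] = cov(i, j) / math.sqrt(cov(i, i) * cov(j, j))  # correlation
            else:
                matrix[i][j] = cov(i, j)  # covariance
    return matrix


x = [1.0, 2.0, 3.0, 4.0]
y = [2.0, 4.0, 6.0, 8.0]  # hypothetical data: y = 2x exactly
for row in cov_corr_matrix([x, y]):
    print(row)
# approximately: [var(x) = 5/3, corr = 1], then [cov = 10/3, var(y) = 20/3]
```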

Some users find typing subop names tedious and prefer to have SST prompt
them for subop arguments and options. If you type a command such as
COVA, which requires one or more subops, SST will prompt you for the
missing subops. For example, type:

cova

and SST will respond:

VAR[]:

You now type the list of variables for which you desire descriptive
statistics:

VAR[]: x y

When using subop prompting, you do not enclose subop arguments in
brackets. Since VAR is the only required subop for the COVA command,
SST now asks you for a list of options:

OPTIONS: mean std

where the response mean std was supplied by the user. Subops entered in
response to the OPTIONS prompt should be written out in full, e.g.:

OPTIONS: coef[beta] covmat[cov] pred[xbeta]

You may also want a scatterplot of the data. Currently SST supports plots
in both character and graphics mode, depending upon how your system is
equipped. (See the Installation Instructions for details on how
to configure your system.) Character plotting is rather crude since the
limited resolution of the screen does not permit plotting more than one or
two hundred data points. In graphics mode, however, SST scatterplots are
feasible for large datasets.

To obtain a simple scatterplot of x and y, with x on the horizontal axis
and y on the vertical axis, give the following command:

scat var[y x]

The VAR subop specifies which variables to plot, with the first
variable defining the vertical axis and the second variable defining the
horizontal axis. (In fact, the SCAT command has quite a few
variations. See the SST User's Guide for further details.)

Regression analysis is the most commonly used procedure in applied
econometrics. Unfortunately, although most statistical packages allow users
to run regressions relatively painlessly, few allow the user much
interaction with the data as he or she is running the regressions. SST
includes several useful diagnostic tools that should enable users to
have a better understanding of the nature and quality of estimated
regression equations.

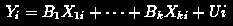

A linear regression equation takes the form:

where i denotes observations, Y is the dependent

variable, X_1, ..., X_k are the independent variables, and Ui is an

unobserved error. Usually, one of the independent variables

is constant (e.g., X_1i = 1 for all i). In SST if you want a

constant term, you will have to create one and include it in the equation:

set one=1

Note that one pitfall of the SET statement is that if no RANGE statement is
in effect, the above command will create a vector of 8000 ones, which is
time-consuming and wastes memory. At this point we can regress y on
x, z, and a constant:

reg dep[y] ind[one x z]

SST will print out ordinary least squares estimates of this equation along
with standard errors, t-statistics, and the R^2.

The power of the REG command, however, is not illustrated by this
simple example. If a regression is worth running, it is also worth the
time to check for patterns in the residuals. SST allows users to save
residuals and predicted values with the subops RSD and PRED:

reg dep[y] ind[one x z] pred[yhat] rsd[u]

The subops PRED and RSD take as their argument a variable
name in which the predicted values and residuals, respectively, will be
stored. Once created, the variables yhat and u can be printed, plotted,
and otherwise manipulated like any other variable.
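For readers who want to see what lies behind the REG output, here is a minimal NumPy sketch of ordinary least squares. It returns the same kinds of quantities: coefficients, standard errors, t-statistics, R^2, and the predicted values and residuals that PRED and RSD would store. This is a textbook computation, not SST's code. The data are simulated, and the R^2 formula assumes the equation includes a constant, as the example does.

```python
import numpy as np


def ols(y, X):
    """Ordinary least squares of y on the columns of X."""
    y = np.asarray(y, dtype=float)
    X = np.asarray(X, dtype=float)
    n, k = X.shape
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    yhat = X @ beta                   # predicted values (what PRED would store)
    u = y - yhat                      # residuals (what RSD would store)
    s2 = (u @ u) / (n - k)            # estimated error variance
    se = np.sqrt(np.diag(s2 * np.linalg.inv(X.T @ X)))
    tstat = beta / se
    r2 = 1.0 - (u @ u) / np.sum((y - y.mean()) ** 2)  # assumes a constant term
    return beta, se, tstat, r2, yhat, u


# Simulated (hypothetical) data with a known relationship.
rng = np.random.default_rng(0)
n = 100
x = rng.normal(size=n)
z = rng.normal(size=n)
y = 1.0 + 2.0 * x - 0.5 * z + rng.normal(scale=0.1, size=n)
one = np.ones(n)  # the constant, like `set one=1`

beta, se, tstat, r2, yhat, u = ols(y, np.column_stack([one, x, z]))
print("coefficients:", beta.round(3))
print("std. errors: ", se.round(3))
print("t-statistics:", tstat.round(1))
print("R^2:", round(r2, 4))
```

Because the equation contains a constant, the residuals sum to zero (up to rounding). That is a quick sanity check worth running on any saved residual variable.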

Two other useful diagnostics, suggested by Belsley, Kuh, and Welch in their
book, Regression Diagnostics (Wiley, 1980), are available in SST.
Studentized residuals (residuals divided by their estimated standard
deviations) can be obtained by including the SRSD subop, which takes
a variable name as an argument. The diagonal elements of the hat matrix
can be obtained by including the HAT subop, which also takes a
variable name as an argument. Large values (positive or negative) of the
studentized residuals indicate outliers, while large values of the hat
matrix diagonal elements indicate observations with high leverage (i.e.,
the estimated regression will be sensitive to the deletion of these
observations).

It is often informative to rerun a regression after deleting observations
which are either outliers or high leverage points. First, we might run the
above regression and save the studentized residuals in a variable called
srsd1 and the hat values in a variable called hat1:

reg dep[y] ind[one x z] srsd[srsd1] hat[hat1]

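Next, we could delete observations for which the studentized residual
is greater than two in absolute value or the corresponding hat element
is unusually large. Hat elements always lie between zero and one;
Belsley, Kuh, and Welch suggest treating values greater than 2k/n as
large, where k is the number of regressors and n the number of
observations. With k = 3 and, say, n = 100, the cutoff is
2(3)/100 = 0.06. We keep only the observations that pass both checks:

reg dep[y] ind[one x z] if[(abs(srsd1) < 2) & (hat1 < 0.06)]

The IF subop modifies the sample range used to calculate the
regression. Only observations for which the expression inside the IF subop
is true will be used for estimation.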
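The two diagnostics and the deletion rule can be sketched with NumPy. The sketch computes internally studentized residuals, e_i / (s sqrt(1 - h_ii)). Belsley, Kuh, and Welch also discuss an externally studentized version that re-estimates s without observation i, and the tutorial does not say which one SST's SRSD subop reports. The function names, the 2k/n hat cutoff, and the simulated data are assumptions for illustration. The data include one high-leverage point and one outlier, planted on purpose.

```python
import numpy as np


def hat_and_studentized(y, X):
    """Hat-matrix diagonal h_ii and internally studentized residuals."""
    y = np.asarray(y, dtype=float)
    X = np.asarray(X, dtype=float)
    n, k = X.shape
    Q, _ = np.linalg.qr(X)     # X = QR with orthonormal Q (n x k), so H = Q Q'
    h = np.sum(Q * Q, axis=1)  # diagonal of H without forming the n x n matrix
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    e = y - X @ beta
    s = np.sqrt((e @ e) / (n - k))
    return h, e / (s * np.sqrt(1.0 - h))


def keep_mask(h, srsd, k):
    """True for observations that are neither outliers nor high-leverage points."""
    return (np.abs(srsd) < 2) & (h < 2 * k / len(h))


rng = np.random.default_rng(1)
n = 50
x = rng.normal(size=n)
x[0] = 10.0                    # planted high-leverage point
y = 1.0 + 2.0 * x + rng.normal(scale=0.5, size=n)
y[1] += 8.0                    # planted outlier
X = np.column_stack([np.ones(n), x])

h, srsd = hat_and_studentized(y, X)
keep = keep_mask(h, srsd, X.shape[1])
beta_all, *_ = np.linalg.lstsq(X, y, rcond=None)
beta_kept, *_ = np.linalg.lstsq(X[keep], y[keep], rcond=None)
print("dropped observations:", np.flatnonzero(~keep) + 1)  # 1-based, like obsno
print("all data:", beta_all.round(3), " after deletion:", beta_kept.round(3))
```

The hat values always sum to k, the number of regressors, so their average is k/n. The 2k/n rule therefore flags points with more than twice the average leverage.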

The estimation range for the REG command can also be modified using
the OBS subop or, as above, the IF subop. For example, we may want to
check for possible nonlinearities or interactions by splitting the sample
depending on whether z is greater than or less than some value (say 3.5):

reg dep[y] ind[one x z] if[z > 3.5]

reg dep[y] ind[one x z] if[z <= 3.5]

The above two REG commands would produce two regressions
corresponding to the division of the sample by values of z.

If you want to end the SST session now, issue the command:

quit

and SST returns you to the operating system. The QUIT command is
very simple; it takes no subops.

Operating SST in interactive mode sometimes seems to require too much
typing. If an operation needs to be repeated with minor modification, we
would rather not have to retype the entire command. One way to lessen the
typing required is to create a batch file with a word processor or editor.
Your word processor allows you to duplicate lines, making small changes as
necessary, and to create large files of commands relatively quickly.

If you have created a file batch.cmd with SST commands, you
can run it by invoking SST in the following way:

C>sst batch

Note that SST assumes an extension of `.cmd' for command files, unless some
other extension is specified. You can then watch your commands and
SST output roll quickly down your screen (probably too quickly to
read). You can save the output for later viewing by redirecting it to a
file, say batch.out:

C>sst batch >batch.out

All this is a little awkward and greatly cuts down on helpful
interaction with the program. There is a better way.

The first thing to realize is that editing batch files and running
SST need not be distinct processes. If you are using WordStar, for
example, and WordStar can be accessed from your current directory,
give the command:

sys ws

and, presto, you are in WordStar while everything in SST remains
undisturbed. When you exit WordStar, the SST prompt will reappear and you
can continue your SST session. The SYS command can be used to
run most DOS programs without leaving SST. To obtain a listing of your
current directory, for example, just give the command:

sys dir

and the directory listing appears on the screen before you are returned to SST.

If you created a batch file of SST commands while you were temporarily
using your editor, the file can now be executed using the RUN command:

run batch

The SST commands in the file batch.cmd will be executed. When SST has
finished executing the batch file, the SST prompt will reappear and SST is
ready to accept new commands.

During an SST session, you may want to save a record of your work. For
this, the SPOOL command is handy:

spool file[sst.out]

The SPOOL command, as issued above, causes a copy of the session to
be saved in the file specified in the FILE subop (in this case,
sst.out). To turn spooling off, give the command:

spool off

and all SPOOL files are closed. Type the SPOOL command by
itself, and SST reminds you which spool files you have open:

spool

No spool files open

The SPOOL command also enables you to create SST batch files
without using your word processor. If the subop CMD is added to the
SPOOL command, only commands are saved to the specified file. If the
subop OUT is added to the SPOOL command, only the output of
executed commands is saved to the specified file. It is possible to have two
SPOOL files open at once: one for commands, the other for output:

spool cmd file[file1.cmd]

spool out file[file2.out]

The file file1.cmd would get your commands while the file
file2.out would get the output produced by SST. This is useful
because the file generated by SPOOL CMD is executable by SST.

You can rerun the session by first closing the CMD file:

spool cmd off

You may want to close the OUT file too. The command:

spool off

would close both files, while the command:

spool out off

would only close the OUT file. Next, give the command:

run file1

and SST runs the file file1.cmd, giving you a rerun of the session!

Of course, you may want to make some minor modifications to the
CMD file, which can be done using your word processor in
conjunction with the SYS command. Thus, SST can provide a complete,
integrated environment for all your statistical work.